AI Has No Butt To Clench

Or, AI can't own risk

On Feb 26th, Slack went down for 9 hours.

As the technical lead for a major project that was going through launch week, my butt was clenched.

While I scrambled to assemble a coordination email, the thought occurred to me: Who else cares? Who feels impacted by the delay caused by the outage? Whose butt is also clenched?

And more cynically: who doesn’t care? Who is treating this as an impromptu vacation day?

Yes, that’s a terribly toxic thing to think about your teammates. But it struck me that especially during an outage, Slack sure looks a hell of a lot like ChatGPT:

The similarities aren’t just visual. In both Slack and ChatGPT, text goes in, text comes out. Sometimes work gets done, sometimes someone looks something up, sometimes the response is high quality, sometimes it’s low quality, and sometimes you just don’t hear back.

I’m not the first to notice this either. This is the product thesis behind Devin.ai’s coding agent.

And everyone seems to be building something similar: Cursor BugBot, OpenAI Codex, Google's Jules, etc. These companies won’t say the word “replacement”, but you can bet their sales teams will imply it.

So then what makes someone irreplaceable by AI? While I was thinking about this, my phone buzzed:

Message: Hey it’s Coworker, found your number in an old doc

Message: What do you think, should we delay launch?

^ This is Coworker, a coworker of mine. Coworker is a significant contributor and stakeholder for the milestones we were launching this week. Coworker is stressed that Slack is down because Coworker is partially accountable if the launch goes poorly. Coworker’s butt is definitely clenched.

AI Has No Butt To Clench

Here is the thesis in a nutshell:

As your leadership grows, so does your risk (accountability for bad outcomes).

As your risk grows, you will offload risk and responsibility to other budding leaders.

AI cannot feel risk, so it cannot own risk. You cannot offload risk to AI.

Thus, to avoid being replaced by AI, you must master the thing it cannot do: owning risk.

What is risk?

Risk isn’t just the big stuff like AI turning the world into paperclips or ordering 4 thousand pounds of meat.

It’s also smaller, personal stakes. Imagine convincing friends to see a concert that turns out to be awful. You feel that pit in your gut because you made the decision.

That’s risk. You cringe at the outcome of your decisions; AI doesn’t.

Think about who is being sued right now. ChatGPT? Nope. You can’t blame ChatGPT. Instead you blame:

You can’t sue ChatGPT because ChatGPT can't be accountable. ChatGPT can’t feel risk, so ChatGPT can’t own risk. ChatGPT can’t clench because ChatGPT has no butt to clench.

Leadership is risk

What is all this butt-clenching about? Good question.

When:

The CEO demos your team’s project

You click the “launch” button

Or something breaks in a big and public way

You can tell who the leaders are (who owns the most risk) by whose butt is most clenched.

Leaders survive by offloading risk

As leaders become more and more senior, they will own more “landscape” (as a student put it), and therefore they will own more risk.

At some point, this becomes untenable. One person cannot be responsible for everything that goes wrong on Airbnb.com.

So how do leaders survive? Typically, they look for budding leaders nearby to share the risk and responsibilities with. Delegating progressively larger accountability is also how they grow leadership in others.

So what happens when you try to offload risk to AI? Well:

AI can’t own risk

AI doesn’t cringe if it gives a bad concert recommendation.

AI doesn’t care if it’s building a demo for the CEO or just for you.

AI doesn’t stress if the button it just clicked was a “launch” vs “test” button.

AI can’t be fired or promoted. It doesn’t worry about whether its peers think it's incompetent. It doesn’t care about its reputation. It doesn’t stay up at night cringing at that dumb thing it said.

AI can’t feel the repercussions of the decisions it makes.

AI can’t feel risk, so AI can’t own risk.

AI can’t clench its butt because AI has no butt to clench.

When you delegate risk to AI, your risk actually amplifies. Not only is AI unaccountable for the decisions it makes, but it can also make a thousand decisions in the time it takes you to make one. Now you are accountable for those thousand decisions.

Look at where AI is useful today:

Frontend-leaning prototypes to flesh out new ideas and capture new markets

Summarizing information (read-only applications)

Writing tests and building redundancy in systems

Research and deep knowledge aggregation

Suggesting initial ideas to get over the activation energy of starting a hard task

Do you notice the common thread? None of these require any real risk. They delegate the risk to the invoker.

Where does AI fail today?

Handling real, complex business logic

Building anything of significant complexity for existing users at scale

Agentically navigating anything of consequence like personal finances or travel purchases

These are all things that require an owner of risk.

Become an expert in owning risk

Until AI develops a clenchy butt, humans have an advantage.

Humans can own risk

Humans can care about some things more than others

Humans have butts that can clench

So the advice is pretty simple: Seek butt-clenchy opportunities. When you feel that pit in your gut that says “people won’t like me if I make the wrong decision”, lean in.

Put yourself in situations where you are forced to be accountable for the decisions that you make. The only way to learn how to handle risk is to put yourself in situations that feel like they matter.

If you are early in your career, you’re in the reps phase. Make as many decisions as you can, as fast as you can. Find opportunities to feel that pit in your gut. You’ll only learn how to reduce, mitigate, and understand risk when you become comfortable owning it.

Is the junior engineer replaced by AI?

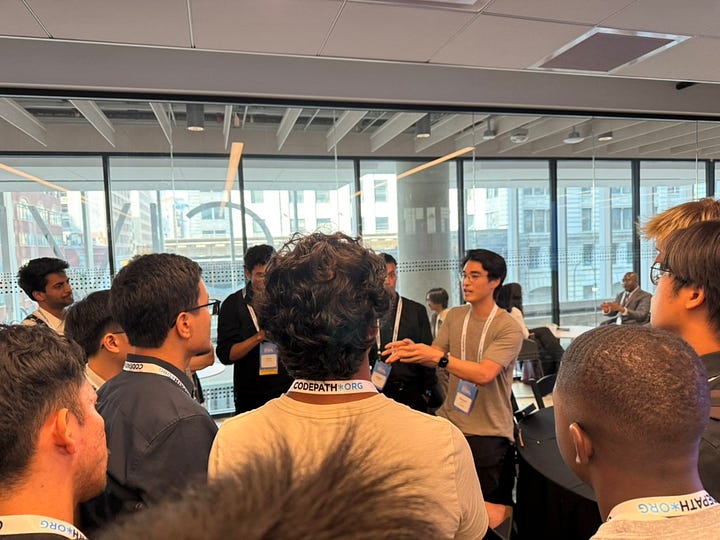

This essay is based on a talk I gave at CodePath.org. The audience was full of young, energetic, and anxious college grads starting to hear that “Jr. engineers will be replaced by AI”. Please excuse the goofy metaphors; they served as a lighthearted and memorable way to anchor the core idea!

If you’re similarly concerned that junior developer jobs are disappearing, I encourage you to take a look at the full presentation here, where I have a bit more actionable advice.

Conclusion

I don’t even remember if we delayed the launch on February 26th or not. Isn’t that always how it goes? In the moment, it felt so risky, and yet in hindsight it barely mattered.

Risk doesn’t have to be big to be valuable. The point is to be comfortable feeling and owning it so that you can learn how to handle and mitigate it. You’ll also learn that it’s rarely as critical as it feels in the moment, so when you feel risk, lean in.

To summarize:

AI is going to replace some kinds of work.

But AI cannot own risk.

So you should invest in being an expert risk-owner.

Remember, you’re not an intern.

You’re a Senior Butt Clencher in training.

Appendix

“Leadership is risk” as a model isn’t perfect. The best leaders look to reduce risk overall by building redundant systems, testing hypotheses, building a blameless culture, and more.

I totally get that “AI” is a very overloaded term here. In general I am referring to the agentic systems powered by generative AI, but the same could be said for any autonomous system. You can’t sue a GPU, a high-frequency trading bot, or a microservice for malfunctioning.
